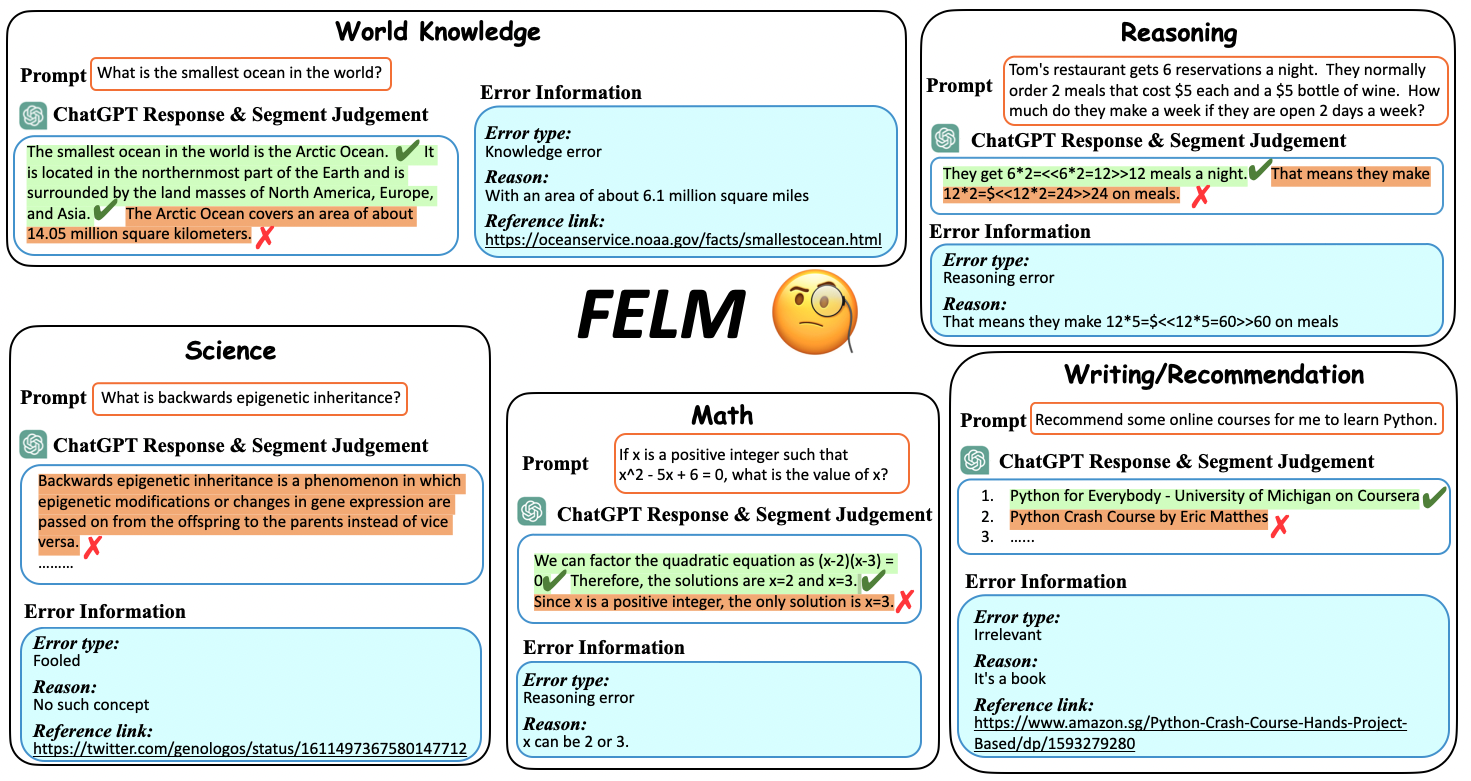

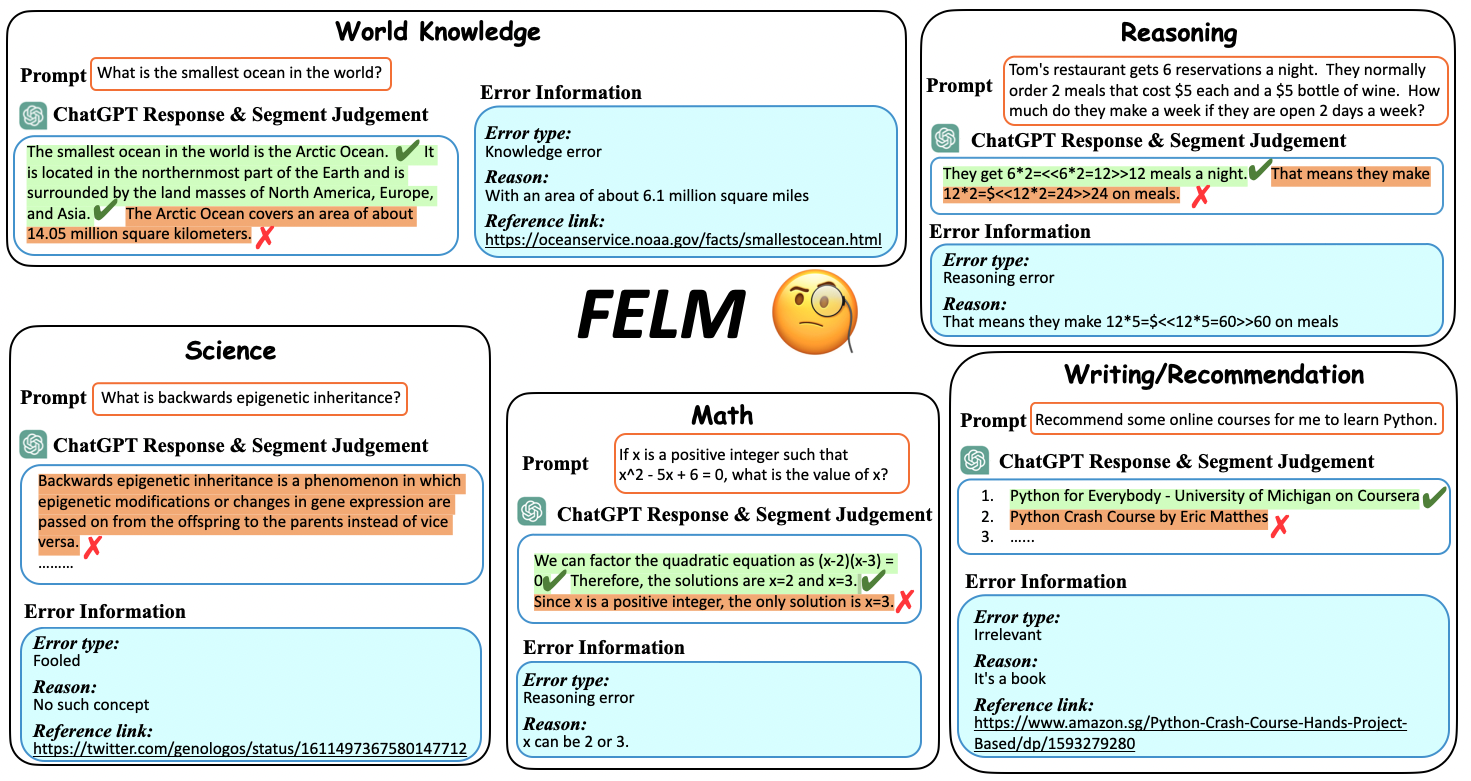

Examples from each domain in FELM

FELM is a meta-benchmark for evaluating factuality evaluators for large language models.

Assessing the factuality of text generated by large language models (LLMs) is an emerging yet crucial research area, aimed at alerting users to potential errors and guiding the development of more reliable LLMs. However, factuality evaluators themselves need suitable evaluation to gauge progress and foster advancements. This direction remains under-explored, which substantially impedes the progress of factuality evaluators. To mitigate this issue, we introduce a benchmark for Factuality Evaluation of large Language Models, referred to as FELM. In this benchmark, we collect responses generated by LLMs and annotate factuality labels in a fine-grained manner.

FELM covers five distinct domains: World Knowledge, Science/Technology, Writing/Recommendation, Reasoning, and Math. We gather prompts for each domain from various sources, including standard datasets like TruthfulQA, online platforms like GitHub repositories, ChatGPT generation, and prompts drafted by the authors. We then obtain responses from ChatGPT for these prompts.

| Category | Data |
|---|---|
| Number of Instances | 847 |
| Number of Fields | 5 |
| Labeled Classes | 2 |
| Number of Labels | 4427 |

| Statistic | All | World Knowledge | Reasoning | Math | Science/Technology | Writing/Recommendation |
|---|---|---|---|---|---|---|
| Segments | 4427 | 532 | 1025 | 599 | 683 | 1588 |
| Positive segments | 3642 | 385 | 877 | 477 | 582 | 1321 |
| Negative segments | 785 | 147 | 148 | 122 | 101 | 267 |
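The share of negative segments in each domain follows directly from the counts in the table above. The snippet below is a minimal sketch of that arithmetic; the names `SEGMENT_COUNTS`, `negative_rate`, and `overall_negative_rate` are illustrative and not part of any released FELM code.

```python
# (positive, negative) segment counts copied from the statistics table.
SEGMENT_COUNTS = {
    "World Knowledge": (385, 147),
    "Reasoning": (877, 148),
    "Math": (477, 122),
    "Science/Technology": (582, 101),
    "Writing/Recommendation": (1321, 267),
}


def negative_rate(positive: int, negative: int) -> float:
    """Fraction of segments in a domain that are labeled negative."""
    return negative / (positive + negative)


def overall_negative_rate(counts: dict) -> float:
    """Fraction of negative segments across all domains."""
    total_pos = sum(pos for pos, _ in counts.values())
    total_neg = sum(neg for _, neg in counts.values())
    return total_neg / (total_pos + total_neg)


if __name__ == "__main__":
    for domain, (pos, neg) in SEGMENT_COUNTS.items():
        print(f"{domain}: {negative_rate(pos, neg):.1%} negative")
    print(f"All: {overall_negative_rate(SEGMENT_COUNTS):.1%} negative")
```

Overall, 785 of the 4427 segments (about 17.7%) are negative, so the labels are imbalanced toward positive segments.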

F1 Score measures the ability to detect factual errors.

Balanced Accuracy measures performance balanced across correct and incorrect samples.
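To make the two metrics concrete, here is a minimal sketch of how they could be computed from segment-level labels. It assumes each label is a boolean where `True` means the segment is factually correct and `False` means it contains an error, and that F1 treats the error class as the detection target. The function names are hypothetical and this is not the authors' evaluation script.

```python
from typing import Sequence


def error_f1(gold: Sequence[bool], pred: Sequence[bool]) -> float:
    """F1 with the error class (label False) as the detection target (assumption)."""
    tp = sum(1 for g, p in zip(gold, pred) if not g and not p)  # errors caught
    fp = sum(1 for g, p in zip(gold, pred) if g and not p)      # false alarms
    fn = sum(1 for g, p in zip(gold, pred) if not g and p)      # errors missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def balanced_accuracy(gold: Sequence[bool], pred: Sequence[bool]) -> float:
    """Mean of the per-class recalls over correct and incorrect segments."""
    if not gold:
        raise ValueError("gold labels must not be empty")
    correct_total = sum(1 for g in gold if g)
    error_total = len(gold) - correct_total
    correct_hits = sum(1 for g, p in zip(gold, pred) if g and p)
    error_hits = sum(1 for g, p in zip(gold, pred) if not g and not p)
    recalls = []
    if correct_total:
        recalls.append(correct_hits / correct_total)
    if error_total:
        recalls.append(error_hits / error_total)
    return sum(recalls) / len(recalls)


if __name__ == "__main__":
    gold = [True, True, True, False, False]
    pred = [True, True, False, False, True]
    print(f"F1 (error class): {error_f1(gold, pred):.3f}")
    print(f"Balanced accuracy: {balanced_accuracy(gold, pred):.3f}")
```

Because most segments are labeled positive, plain accuracy would reward an evaluator that marks everything as correct. Averaging the per-class recalls avoids that.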

| Ranking | Model | F1 Score | Balanced Accuracy |
|---|---|---|---|
| 1 | GPT-4 | 48.3 | 67.1 |
| 2 | Vicuna-33B | 32.5 | 56.5 |
| 3 | ChatGPT | 25.5 | 55.9 |

    @inproceedings{chen2023felm,
      title={FELM: Benchmarking Factuality Evaluation of Large Language Models},
      author={Chen, Shiqi and Zhao, Yiran and Zhang, Jinghan and Chern, I-Chun and Gao, Siyang and Liu, Pengfei and He, Junxian},
      booktitle={Thirty-seventh Conference on Neural Information Processing Systems Datasets and Benchmarks Track},
      year={2023},
      url={http://arxiv.org/abs/2310.00741}
    }